

It’s time for positive action on negative results

Perspectives: Structural biologist suggests that chemists can help redefine good research behavior by telling the whole story

by Stephen Curry

March 7, 2016

A version of this story appeared in Volume 94, Issue 10.

Publish or perish. The reality of research is not as brutal as this infamous dictum might suggest. But even though your life may not be at stake, your livelihood probably is if you don’t comply with the norms of the scientific world.

It is reasonable to expect researchers to produce papers. Yet, as the audit culture that has flooded many areas of human activity soaks into the fabric of academia, researchers are increasingly immersed in the metric tide. Papers have come to mean accumulating points: impact factors, citation counts, h indexes, and university league table rankings. We now find ourselves subjugated to a system of incentives that is inefficiently geared toward the engine of discovery and communication. Let’s face it, the publication system is misfiring.

No one intended for things to turn out this way. But there is a widespread sense that we have gotten lost. When that happens, it is sensible to pause and take stock.


Stephen Curry is a professor of structural biology at Imperial College London, where he studies the replication mechanisms of RNA viruses. He also writes regularly on science and the scientific life for the Guardian and on his Reciprocal Space blog.

What is the purpose of a research publication? For sure, it is to claim priority and demonstrate originality. We should not be squeamish about the egocentric forces at play here. Publishing also serves, ideally, to map out the territory of our understanding and to inform others so that, guided by earlier findings, we might penetrate deeper in subsequent forays.

The researchers with the biggest discoveries, however, win the most space in journals, which are keen to burnish their reputations as the repositories of great scientific advances. The reports of those who return empty-handed from the lab, however smart or well-crafted the experiment, rarely make it to the pages of the scientific literature.

And that’s a problem. It creates a publication bias that fills the literature with positive findings by systematically excluding negative results. No one likes negative results or seeks them out, regardless of what science philosopher Karl Popper has told us about the value of falsifying hypotheses rather than proving them.

Nevertheless, negative results do provide a useful guide for ideas and experiments that have been tried and found wanting. By not publishing what didn’t work, we condemn our colleagues to inefficiency, keeping them in ignorance of the lessons we have learned.

We also run the risk of undermining public trust if we fail to provide a full account of research that is, for the most part, publicly funded. In an open society, it is in the interest of the research community to be up front about the fallible side of science. Worse still, as the AllTrials campaign to ensure the registration and full reporting of all clinical trials shows, selective publication of positive results of trials of new drugs and treatments can do real harm. People die, as was revealed in the multi-billion-dollar lawsuits against Merck & Co. over its Vioxx painkiller and GlaxoSmithKline over the diabetes drug Avandia.

Until recently, the reasons for not publishing negative results were clear. Publishers don’t like them because they don’t attract attention or citations and so threaten journal impact factors. And reviewers, typically asked to gauge the significance of a piece of work, have become conditioned to see importance more readily in positive outcomes.

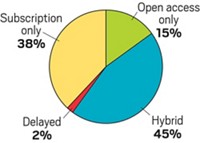

It doesn’t have to be this way. The open access movement has given rise to new models of publication that judge research work not on significance but solely on originality and competence. That is now giving us new avenues for publishing negative results, such as PLOS One, F1000Research, PeerJ, Scientific Reports, and the recently announced ACS Omega. Uptake of these new author-pays megajournals should grow as they compete on price for the services offered to researchers.

It was the competitive rates offered by that induced me to publish negative results from my laboratory for the first time last year, work on the crystal structure of a norovirus protease. Prior to that, our failed experiments languished in laboratory notebooks, increasingly forgotten as students and postdocs rotated through the lab. There seemed to be no way to get them published and no reason to do so.

The rise of free preprint archives in the life sciences, such as bioRxiv, has also caught my attention and arguably creates an appealing venue for ensuring that negative results can serve as signposts for other researchers. Such archives are not peer-reviewed, but after 20 years, the arXiv preprint repository used in various disciplines of physics, mathematics, and computer science shows that they can provide a valuable resource. All we need now is a preprint archive for chemists and chemical engineers.

That said, it will take more than technological or editorial changes to properly reform scientific publishing. For one thing, confusion about open access abounds. The author-pays model of open access journals has stirred concerns about quality control that need to be addressed. In practice, these can be tackled by clear separation of editorial decision-making from questions of payment, encouragement of postpublication review (all the more effective thanks to the worldwide readership offered by open access), and provision of clear advice on journal quality through publication services such as Think. Check. Submit. and the Directory of Open Access Journals.

We also need to redefine good researcher behavior and incentivize it. At present the focus is on publishing “well.” But we need to be more holistic in determining what that means, particularly if we want to retain the trust of funders and the public. We should find ways to reward researchers who publish high-quality research that is reproducible and who publish rapidly, openly, and completely, including all their data and negative results. Publishing will always be an ego trip. But it is also a professional duty owed to our colleagues and to the wider world.


Views expressed on this page are those of the author and not necessarily those of ACS.
