Advertisement

Grab your lab coat. Let's get started

Welcome!

Welcome!

Create an account below to get 6 C&EN articles per month, receive newsletters and more - all free.

It seems this is your first time logging in online. Please enter the following information to continue.

As an ACS member you automatically get access to this site. All we need is few more details to create your reading experience.

Not you? Sign in with a different account.

Not you? Sign in with a different account.

ERROR 1

ERROR 1

ERROR 2

ERROR 2

ERROR 2

ERROR 2

ERROR 2

Password and Confirm password must match.

If you have an ACS member number, please enter it here so we can link this account to your membership. (optional)

ERROR 2

ACS values your privacy. By submitting your information, you are gaining access to C&EN and subscribing to our weekly newsletter. We use the information you provide to make your reading experience better, and we will never sell your data to third party members.

Computational Chemistry

Chemistry By The Numbers

From data collection and analysis to quantum calculations, computers have revolutionized chemistry

by Mitch Jacoby

September 9, 2013

| A version of this story appeared in

Volume 91, Issue 36

It’s hard to overestimate the impact of computer-based calculations on chemistry. Even if measured strictly by the importance of quantum mechanical calculations, which many chemists consider synonymous with computational chemistry, the results would be staggering. Scientists depend on computerized quantum calculations to probe and understand properties of molecular systems in every area of chemistry.

For decades, researchers have relied on these methods to explore the energetics, structure, reactivity, and other properties of molecules. More recently, quantum methods and computers have become powerful enough for computational chemistry to tackle complex molecular systems in biochemistry, pharmaceutical chemistry, catalysis, and materials chemistry, including relatively large entities such as nanostructured materials.

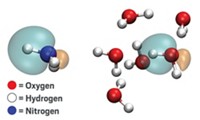

But researchers use computers to do so much more. They use them to store, examine, and sift through enormous data sets in search of hidden connections, trends, and chemical relationships. They use them to rapidly convert measured quantities to mathematically related ones and to automatically display the results in graphically intuitive forms. Nowadays, scientists routinely study chemistry by displaying and rotating accurate three-dimensional renderings of molecules on biological surfaces and in other complex environments—and they do so with ease.

Compared with the state of affairs 50 or more years ago, “computers have completely transformed chemistry research,” says Northwestern University’s George C. Schatz, a veteran computational scientist. “Computers are simply part of the fabric of chemistry today.”

It used to be a huge job to collect data manually and crunch numbers to get the quantities needed to evaluate experimental results, Schatz explains. “Now we hardly even think about it” because those operations are done automatically by a computer hidden in an instrument. Computers have majorly expedited chemical analysis by enabling even nonspecialists to quickly carry out data workup procedures, which used to be tedious and labor-intensive.

Similarly, quantum mechanical methods have become more common and user-friendly in the past two decades while becoming more powerful. Quantum methods used to lie exclusively in the domain of theoreticians. No longer.

Quantum calculations have become accessible to many researchers, says Peter J. Stang, a chemistry professor at the University of Utah and editor-in-chief of the Journal of the American Chemical Society. “These days, more and more papers submitted to JACS include such calculations,” he asserts.

One reason for the popularity is the wide availability of quantum mechanics computer programs. When University of Georgia, Athens, quantum chemist Henry F. (Fritz) Schaefer III was doing graduate work in quantum mechanics in the 1960s, these programs weren’t available. “We had to write the codes ourselves in those days, and there were very few people to turn to for help,” he recalls. Now many quantum programs are free and work well, he says. In many cases, scientists using computational tools are experimentalists who use quantum calculations to enhance their studies, just like using one more method to probe a chemical system and bolster a scientific argument.

It wasn’t always that easy. Like C&EN, quantum mechanics is roughly 90 years old. In the field’s early days, physicists manually calculated electron energies and other properties of simple entities, such as the hydrogen atom. Complex systems—such as atoms larger than hydrogen and small molecules—remained out of reach of quantum mechanics until the 1940s and 1950s, when computers were developed.

Quantum chemistry—the application of quantum mechanics to molecular systems—had to wait for computers powerful enough to solve the so-called many-body problem, which describes how atomic particles affect each other. For these systems, the Schrödinger equation, the solution of which would provide a wealth of chemical information, cannot be solved directly by calculus.

In the early days of computers, researchers figured out ways to get around the problem. They developed approximations to, and simplifications of, the theoretically rigorous forms of quantum mechanical equations and exploited the ability of computers to rapidly carry out large numbers of calculations. They showed that computerized quantum methods, in principle, could provide information of real value to chemists.

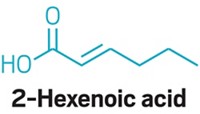

For example, the methods could be used to calculate molecular geometries, reaction energies, and reaction rates. They could also be used to determine reaction barrier heights and vibrational frequencies, some types of spectra, and many other molecular properties, including some that could not be measured in a laboratory.

That tantalizing possibility meant that computations not only could help explain experimental results, such as tough-to-interpret spectra, but also had predictive power. Computations could predict experimental outcomes and could describe properties of as-yet-unsynthesized or -unexplored molecules and materials.

During the first few decades of computers, these methods could handle the smallest molecules. Through better approximations and more efficient computer procedures for multistep calculations, quantum aficionados slowly built a large collection of computer-based techniques and expanded their reach. Some of the strategies, those that simplify the mathematics by including experimental parameters, are known as empirical or semiempirical methods.

Another group of calculation techniques are called ab initio methods, “ab initio” meaning “from the beginning” or “from first principles.” These do not include experimental input and tend to be more accurate than empirical methods but nonetheless also rely on simplifications. For example, they may sidestep the many-body problem, which is difficult to solve, by accounting for electron-electron repulsion in an average way. Perhaps the most common ab initio methods today are those based on density functional theory (DFT), which simplifies the many-body problem in a way that depends on the spatial distribution of electron density in a molecular system.

Because all methods invoke approximations, some error—differences between calculated and the best measured values—remains. And a key computational chemistry challenge has not changed since the field’s early days: to simplify calculations enough to make them solvable but ensure that results are accurate enough to correctly predict the physical and chemical properties of target molecules.

One molecule that sparked debate in theoretical and experimental circles for years is CH2, the methylene radical. Results from ab initio quantum studies by Schaefer and others in the early 1970s differed markedly from laboratory measurements. For example, spectroscopy work in the 1960s and 1970s indicated that the molecule is linear—that is, that the H–C–H angle is 180°. Computations predicted it was bent, with a 135° bond angle. Computation and experiment also differed widely regarding the energy difference between CH2’s singlet and triplet electronic states.

After much back and forth between teams of experimentalists and theoreticians, the computed values for CH2 turned out to be correct, as verified by new and improved experiments. Schatz notes the benefit of the prolonged debate: “This kind of interplay between theory and experiment drove both sides to improve their methods,” he says.

By around 1980, theoreticians had moved on to studying chemical reactions of larger molecules, naphthalene (C10H8) for example, aided by powerful computers such as Digital Equipment Corp.’s then popular VAX-11/780. That computer boasted much faster processing speeds and far greater memory than its predecessors and received data and instructions via monitors and keyboards, not the punch cards and card readers used with previous systems.

\

\

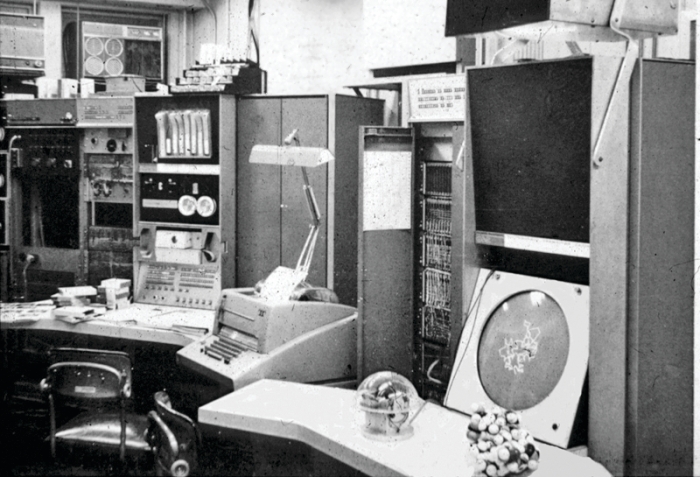

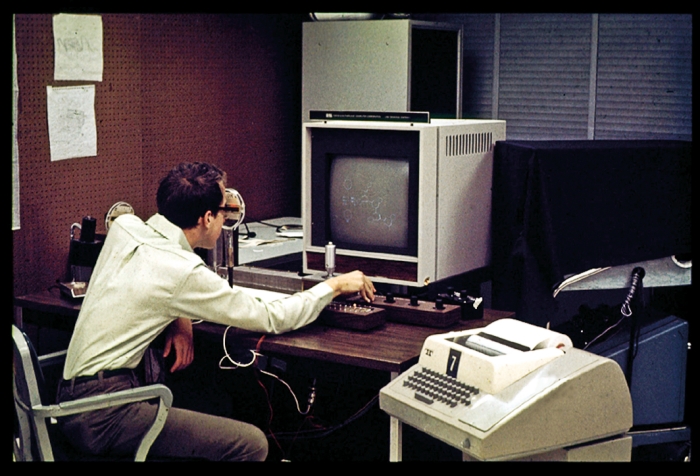

Molecular graphics pioneers, such as Robert Langridge, built computerized display systems to enable scientists to see and study the geometry, motions, and other properties of molecules in 3-D and color. Shown here is a student in the early 1970s in Langridge’s Princeton University lab (left) and a 1960s MIT lab that featured a hand-operated “crystal ball” (left of monitor) for manipulating the screen image. “The molecular model on the right was for emergency use if the computer went down,” Langridge says.

In those days, Kenneth M. Merz Jr. was a graduate student working with Michael J. S. Dewar at the University of Texas, Austin. Merz used that powerful computer and semiempirical methods to investigate how azulene rearranges to naphthalene (J. Am. Chem. Soc. 1985, DOI: 10.1021/ja00307a051). Merz, now at Michigan State University and chair of the ACS Division of Computers in Chemistry, says key steps of that calculation, which was nearly unapproachable a decade or so earlier, took a few hours of computation time and other steps required a few days. Today those same steps could be completed in less than 1 second and a few minutes, respectively, he says.

Merz is quick to point out that the vastly increased capabilities aren’t due only to hardware improvements. Innovations in handling the mathematics, chemistry, and physics that underpin computational methods deserve much of the credit, he insists.

Computations today continue to get bigger, better, and faster. High-level calculations of the 1980s that modeled classic organic reactions of naphthalene-sized compounds have given way to today’s state-of-the-art quantum mechanical treatment of multi-thousand-atom biomolecules. A case in point is a recent study led by Christian Ochsenfeld of Ludwig Maximilian University, in Munich, on a 2,025-atom protein-DNA complex that plays a key role in repairing DNA from oxidative damage (J. Chem. Phys. 2013, DOI:10.1063/1.4770502).

Similar computational advances continue to unfold in other areas. In materials chemistry, for example, researchers use computer methods to explore extremely high and selective gas uptake in the pores of metal-organic framework (MOF) compounds. University of California, Berkeley, chemist Omar M. Yaghi, a MOF specialist, explains that computations help scientists understand the nature of the molecular interactions that control gas adsorption in the chemically and structurally complex pore environment. Computations are also “beginning to point us in the right direction for optimizing properties and improving performance,” he adds.

In much the same way, computational methods are starting to lead researchers in the search for new solid catalysts. According to Stanford University’s Jens K. Nørskov, that newfound role for computation stems from its ability to examine enormous data sets and uncover predictive trends among classes of materials. Also critical is computation’s knack for identifying essential “descriptors,” which are fundamental properties, such as binding energies, that strongly and perhaps unexpectedly affect a solid’s catalytic properties. That approach led to predictions, confirmed experimentally, that low-cost MoS2, a common fuel desulfurization catalyst, should function well as a catalyst for hydrogen evolution, a reaction typically associated with expensive noble metals.

The predictive power of computations also guides researchers in designing experiments that explore the chemical properties of the heaviest elements in the periodic table. Recent experiments of that type have confirmed predictions regarding volatility, complex formation, and the oxidation state of numerous superheavy elements.

Computational methods now also play a key role in structure-based drug design. This approach aims to come up with a therapeutically beneficial compound, often a small organic molecule, with just the right shape and charge distribution to fit and bind effectively to a biomolecular target.

Not long ago, the magnitude of that type of computational problem would have made it unsolvable, says Charles H. Reynolds, president of Gfree, Doylestown, Pa. Now, he says, “structure-based drug design has become an indispensable tool in drug discovery.” It has led to numerous medications, including drugs to treat HIV/AIDS, hypertension, and various types of cancer.

It’s hard to imagine a future in which computations don’t play a key role in the chemical sciences. “Computations open totally new possibilities for people who are imaginative and let them test ideas on a timescale that until recently you couldn’t even dream about,” Nørskov says.

They also trigger novel thought processes and stimulate discussion about new research directions, in Yaghi’s view.

And besides, as Reynolds points out, computation “is just about the only area in which, year after year, the capabilities go up and the cost goes down.”

MORE ON THIS STORY

Introduction: Nine For Ninety | PDF

Chemical Connections | PDF

Antibacterial Boom And Bust | PDF

Small Science, Big Future | PDF

Understanding The Workings Of Life | PDF

Chemistry By The Numbers | PDF

Plastic Planet | PDF

The Catalysis Chronicles | PDF

Giving Chemists A Helping Hand | PDF

It's Not Easy Being Green | PDF

Readers' Favorite Stories | PDF

Nine Decades Of The Central Science | PDF

How Chemistry Changed the World | C&EN's 90th Anniversary Poster Timeline

Trying To Explain A Bond

A Toast To C&EN At 90

Join the conversation

Contact the reporter

Submit a Letter to the Editor for publication

Engage with us on Twitter