Environment

New Tools For Risk Assessment

EPA lays out how it anticipates using computational toxicology data for regulatory decisions

by Cheryl Hogue

June 11, 2012

A version of this story appeared in Volume 90, Issue 24

Deployment

The Environmental Protection Agency is gearing up to use computational toxicology data in several regulatory applications including the following:

◾ Analysis of the hazards of and exposure to chemicals already on the market

◾ Review of premanufacture notices for new commercial chemicals under the Toxic Substances Control Act

◾ Registration of pesticides under the Federal Insecticide, Fungicide & Rodenticide Act

◾ Estimation of human exposure to unregulated contaminants found in public drinking water systems

Since 2007, researchers at the Environmental Protection Agency have been developing new methods for determining the risks that chemicals pose to people and the environment. Their efforts are focused on computational toxicology, which brings together molecular biology, chemistry, and computer science and relies on high-throughput testing methods widely used by pharmaceutical companies.

Now, EPA regulators are preparing to apply computational toxicology tools in their work. This move will have implications for companies that manufacture chemicals.

Specifically, the agency plans to incorporate computational toxicology data in regulatory decisions about commercial chemicals and pesticides. In addition, EPA expects to use the data to determine which unregulated contaminants in drinking water need greater scrutiny.

Agency officials described their plans on May 30 to a panel of EPA’s Science Advisory Board (SAB). The board’s Exposure & Human Health Committee is to provide recommendations to EPA on how the agency can advance the application of computational toxicology in its hazard and risk assessments of chemicals.

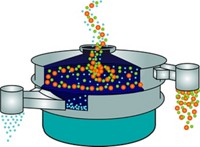

EPA’s Office of Research & Development (ORD) is creating and refining computational toxicology tools in an effort called ToxCast, which is part of its Chemical Safety for Sustainability Research program, explained Robert J. Kavlock, ORD deputy assistant administrator for science. One goal for ToxCast is generating hazard and exposure information that regulators need to make risk-based decisions about chemicals, he told the SAB panel. This effort is also aimed at reducing the use of animals in laboratory tests.

ToxCast focuses on how—rather than just whether—chemicals cause health effects, Kavlock continued. Such information is expected to eventually help EPA identify segments of the population, such as children or the elderly, that are more susceptible to the effects of chemical exposure. It will also help EPA analyze the effects from exposure to mixtures of compounds, Kavlock said.

EPA’s overarching objective is to use computational toxicology tools in ways that are rational and scientific and that conform to legal mandates in federal environmental laws, Kavlock said. Computational toxicology information won’t be used for regulatory decisions until the data meet these goals.

EPA is preparing for that shift. For instance, the agency intends to deploy computational toxicology tools to evaluate thousands of commercial chemicals on the market, said James J. Jones, EPA acting assistant administrator for chemical safety and pollution prevention. Currently, the agency can’t scrutinize as many chemicals as it wants as fast as it wants, he said. With existing analytical methods, including structure-activity relationships and traditional toxicology testing, the agency can take a close look at a maximum of perhaps 50 chemicals per year, he told the SAB panel. Computational toxicology, he said, will speed up the pace, providing “tools to more effectively and efficiently assess chemical safety.”

The agency also hopes to put computational toxicology data to work on chemicals being introduced to the market, said Jennifer Seed, deputy director of the Risk Assessment Division of EPA’s Office of Pollution Prevention & Toxics. EPA would use such information as it reviews the notices that chemical manufacturers submit to the agency before commercializing a new compound. Each year, EPA receives about 2,000 of these premanufacture notices, which are filed under the Toxic Substances Control Act, Seed said.

Using computational toxicology data would be helpful because companies are not required to provide toxicity data on new products when they file these notices, Seed said. Instead, EPA now relies primarily on physical-chemical properties and on methods such as structure-activity relationships to determine whether to require toxicity testing or restrict the uses of a compound before the manufacturer starts selling it. Computational toxicology would add biological data to the agency’s new-chemical reviews, she said.

Computational toxicology data could also help EPA narrow the type of toxicity and other data it needs from pesticide makers to register their products, said Vicki Dellarco, senior science adviser in EPA’s Office of Pesticide Programs. Currently, pesticide manufacturers must supply a broad range of data under the Federal Insecticide, Fungicide & Rodenticide Act to obtain the EPA registration they need to sell products.

In addition, the agency hopes to use computational toxicology data to estimate the public’s exposure to unregulated contaminants in drinking water, said Elizabeth Doyle, a scientist in EPA’s Office of Water. Today, the agency relies on monitoring of these contaminants by a few large public water utilities. Leveraging these new data would support EPA’s work under the Safe Drinking Water Act, which requires EPA to list unregulated contaminants that may require a national limit in the future. That law also requires EPA to decide periodically whether to regulate at least five of the listed contaminants.

Doyle said computational toxicology data used for decisions on unregulated drinking water contaminants need to be “solidly ground-truthed” science. This is because EPA’s decisions to list contaminants can be challenged in court, she said.

Another intended use for computational toxicology techniques by EPA is in its research on chemicals used for hydraulic fracturing, said Ila Cote, senior science adviser for EPA’s National Center for Environmental Assessment. This method of extracting natural gas from shale using water, chemicals, and sand under pressure is commonly called fracking. Cote told the science advisers that EPA will seek peer review for the methods it uses to assess these substances. David Dix, acting director of EPA’s Center for Computational Toxicology, said this research would involve analysis of hundreds of fracking chemicals.

Researchers are also continuing to build computational toxicology tools. In an effort called ExpoCast, ORD is working with academic researchers to refine computer models for estimating human exposure to chemicals found in consumer goods, Dix told the SAB panel. The agency is comparing the results of these computer-based efforts with biomonitoring data from the U.S. National Health & Nutrition Examination Survey, he said. Conducted by the Centers for Disease Control & Prevention, this survey periodically analyzes the blood and urine of U.S. residents for the presence of hundreds of chemicals.

As the SAB panel began its deliberations on EPA’s computational toxicology efforts, Richard A. Becker, senior toxicologist with the American Chemistry Council, a chemical industry group, urged members to consider several issues. One is ensuring that the new methods are scientifically validated to provide consistent, reliable results over time, he said.

A second involves computational toxicology’s focus on what are called “adverse outcome pathways,” Becker said. These are the links between an initial event at the molecular level—for example, a chemical’s interaction with a receptor such as a nuclear hormone receptor—and eventual health problems.

Data from the high-throughput automated tests could indicate that exposure to a particular chemical may cause a reversible perturbation. On the other hand, the data could indicate that the substance causes critical changes at a later step in the pathway, signaling that an adverse effect has been induced, Becker said. SAB needs to recommend that EPA ensure there is appropriate scientific confidence that these assays provide accurate information about how a chemical can affect the steps in these pathways, he said.

Finally, Becker asked the advisers to gather information on how regulators plan to use computational toxicology data. SAB should scrutinize whether there is enough confidence in these computational methods and prediction models for them to be put to EPA’s intended uses, he said. For instance, the agency’s use of this information in making enforceable regulatory decisions about chemicals would require a greater degree of scientific confidence than would use of this information to screen and prioritize chemicals for further assessment, Becker said.

The SAB panel will study the issue over the coming months before making recommendations to the agency.
