

Perspectives: When are industries judged fairly?

In seeking to understand how people rate risks, psychologists break the big question down into many small ones

by Baruch Fischhoff, Carnegie Mellon University

December 5, 2016

A version of this story appeared in Volume 94, Issue 48.


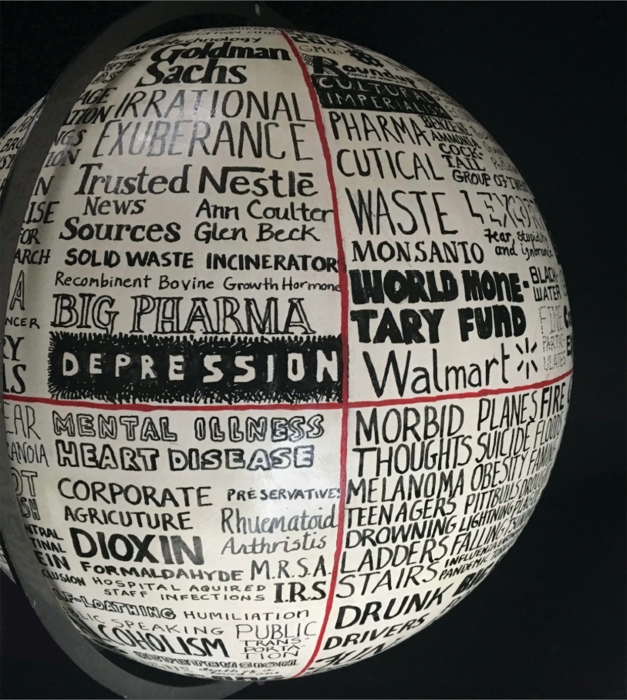

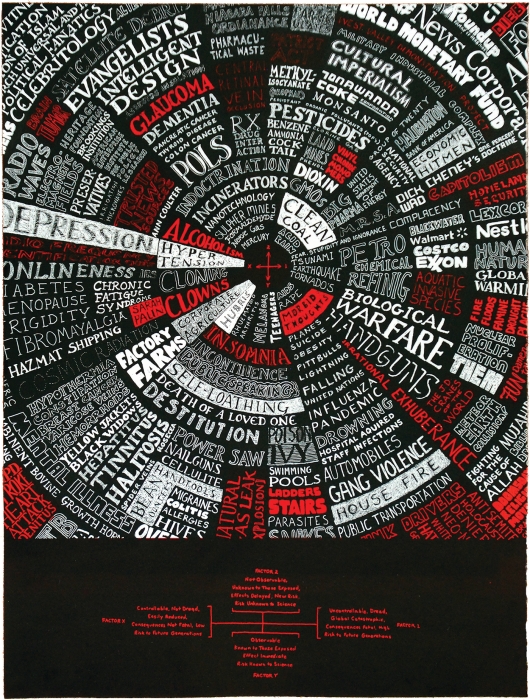

Print media artist Adele Henderson of the University at Buffalo, SUNY, has been creating a series of prints and hand-drawn globes about perceptions of risk. In this work, titled “Perception of Risk, February 2010,” Henderson used x- and y-axes to map out and visualize risks, real and/or imagined, to herself and to others.

Some 40 years ago, people from several beleaguered industries began asking me and my psychologist colleagues, Paul Slovic and Sarah Lichtenstein, a big question: “Why do some members of the public not love the technologies to which we have dedicated our lives?”

Those initial calls were primarily about the safety of civilian nuclear power and liquefied natural gas. The chemical industry was not far behind and was of particular interest to me, as my father was an industrial chemist and I grew up with C&EN around the house.

The presenting symptoms that we encountered in these consultations were, in effect, “These skeptics about our industry must be crazy. You’re psychologists. Do something.” Although vaguely troubled about being confused with clinical psychologists, we cognitive psychologists saw the challenge: If we understood anything about how people make decisions, then we should have something to say.

Whether judging the risks of a chemical, a financial product, or a looming pandemic, when people lack facts, they must rely on their perceptions. As scientists, we addressed the big question of how those perceptions arise by employing the small-question tools of our field—studies designed to disentangle the complex processes shaping all behavior. Building on basic research into how people think, feel, and interact, our work revealed general patterns.

People may define risk differently. Members of the general public have been found to care more than technical experts about some aspects of risks, such as how they are distributed over people and time. Thus, members of the two groups might agree that two risks have the same expected mortality yet still treat them differently, because one of the risks falls disproportionately on a subset of the population. They also may respond differently to risks that exact the same toll one death at a time or in a single catastrophic event.

People are good at keeping a running mental tally of events but not at correcting for biases in which events reach them. As a result, people may exaggerate the prevalence of accidents and angry people, both of which draw disproportionate news media attention. Correcting for that bias requires first remembering that safe operations and calm citizens are the norm and then estimating how prevalent the exceptions are.

People rely on imperfect mental models. Bugs in these models can undermine otherwise sound beliefs. For example, people who know that radioactive materials can cause long-term contamination may erroneously infer that radon seeping into their homes does as well. They may not know that radon and its decay products have short half-lives, so that homes are safe once radon influx is brought under control by remediation.

People look for patterns. This is how we naturally make sense of the world. However, people sometimes see patterns in random events, as with apparent clusters of cancer, perhaps attributed to chemical exposures. They may perceive patterns in runs of good or bad luck, perhaps attributing them to superstitious sources. Some patterns become apparent only in hindsight, creating an unwarranted feeling that risks could have been seen, and avoided, in foresight.

There is good news in these results and others like them: People judge risks in sensible, imperfect, and somewhat predictable ways. The critical gaps in their knowledge are modest, such as how radon works, which events go unreported, and what other people are thinking. Moreover, the science exists for closing these gaps. Whether that science is used, though, is one of the big questions—of the sort whose answer must draw on personal experience and impressions.

My experience suggests that programs applying our science to communicating technology risks and benefits are often rewarded. For example, my colleagues and I developed a widely distributed brochure about potential negative health effects of 60-Hz electromagnetic fields (EMFs) from both high-voltage power lines and home appliances, just as the issue had begun to boil in the 1980s.

As required by our science, we first summarized the evidence relevant to lay decisions and then interviewed people about their beliefs and concerns. Finally, we tested draft communications, checking that they were interpreted as intended. Among other things, those communications addressed a common bug in lay mental models: how quickly EMFs fall off with distance. We also candidly described the limits to current evidence regarding possible harm and promised that new research results would not be hidden. It is our impression that we contributed to a measured societal response to the risk.

The EMF case had conditions necessary for securing a fair hearing for the chemical or any other industry:

▸ A good safety record. For example, public support for nuclear power rose over the long period of safe performance preceding the Fukushima accident.

▸ Talking to people. The chemical industry’s outreach programs supporting local emergency responders have enhanced trust in many communities.

▸ A scientific approach to communication. The Consumer Financial Protection Bureau is creating disclosures designed to improve trust in banking and insurance products.

Developing scientifically sound communications is not expensive. However, it requires having the relevant expertise and evaluating the work empirically. Such communication often faces three interrelated barriers among some of those responsible for its adoption:

▸ Strong intuitions about what to say and how to say it, discounting the need to consult behavioral science and evaluate communications.

▸ Distrust of the public, perhaps fed by commentators who describe the public as incapable of understanding (for example, as being chemophobic).

▸ Disrespect for the social sciences as sources of durable, useful knowledge.

There is a kernel of truth underlying these barriers. People do have some insight into how other people think, the public can be unreasonable, and social scientists do sometimes oversell their results. To help outsiders be savvy consumers of behavioral research, my colleagues and I have tried to make our science more accessible. Examples include a Food & Drug Administration user guide and the Sackler Colloquia on the science of science communication held in 2012 and 2013, with accompanying special issues of Proceedings of the National Academy of Sciences USA.

It is my impression that the answers to the small questions that we address in our studies can provide a positive answer to the big question: Is it possible for an industry to get a fair hearing when it comes to consumer product and technology safety?

Baruch Fischhoff is Howard Heinz University Professor in the Department of Engineering & Public Policy at Carnegie Mellon University and an expert in risk analysis, behavioral research, and decision science.
