Policy

Redefining Funding

Changing funding formulas in the U.K. worry scientists

by Sarah Everts

April 27, 2009

A version of this story appeared in Volume 87, Issue 17

THE U.K.'S Engineering & Physical Sciences Research Council (EPSRC), which is comparable to the National Science Foundation in the U.S., announced in March that starting in April it would bar scientists from applying for grants if their past success rates were too poor. To many U.K. scientists, the idea seemed more like an early April Fools' Day prank than funding policy.

This policy change is just the latest signal to U.K. chemists that the way they are evaluated for funding is changing. The other big change they face involves how cash from the government's higher education funding body is distributed to universities. That change would move universities away from a peer-review ranking toward a more metrics-driven process.

Under the new EPSRC policy, principal investigators (PIs) who have "three or more proposals within a two-year period ranked in the bottom half of a funding prioritization list or rejected before panel and [have] an overall personal success rate of less than 25% over the same two years" will not be allowed to apply for the agency's money for two years, either as the PI or as a co-PI.

EPSRC notes that this new policy for "persistently unsuccessful applicants" would be retroactive to 2007 and affect between 200 and 250 scientists.
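The eligibility rule quoted above combines two conditions, and an applicant is barred only if both hold. The Python sketch below is a minimal illustration of that test, not EPSRC's actual procedure. It assumes each proposal record carries just two flags (funded, and whether it was ranked in the bottom half of a prioritization list or rejected before panel), and that the records passed in have already been limited to the relevant two-year window. The names `Proposal` and `is_barred` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    """One proposal submitted within the two-year window (hypothetical record)."""
    funded: bool
    # True if ranked in the bottom half of a funding prioritization list
    # or rejected before reaching a panel.
    poorly_ranked: bool


def is_barred(proposals: list[Proposal]) -> bool:
    """Apply both conditions of the quoted rule to one investigator's proposals.

    Assumes `proposals` contains only submissions from the two-year period.
    """
    if not proposals:
        return False
    poorly_ranked_count = sum(p.poorly_ranked for p in proposals)
    success_rate = sum(p.funded for p in proposals) / len(proposals)
    # Both must hold: three or more poorly ranked proposals
    # AND an overall personal success rate below 25%.
    return poorly_ranked_count >= 3 and success_rate < 0.25
```

Under this reading, a researcher with eight proposals, one funded (12.5%) and four poorly ranked, would be barred. A researcher with four proposals, one funded and three poorly ranked, sits at exactly 25% and would not be, because the quoted rule requires a success rate of *less than* 25%.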

To many U.K. researchers, the policy is "blacklisting, pure and simple," says Joe Sweeney, a chemist at the University of Reading.

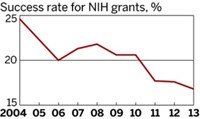

Although some U.K. chemists get money from biological and medical funding councils, most rely on EPSRC grants. Chemists in particular will be hit by the ban because they have lower success rates than other EPSRC applicants: In 2007 and 2008, EPSRC's overall success rate was about 30%, but in some chemistry fields only around 15% of grant applications were funded. One reason for the low success rate is that chemists submit a larger number of smaller-scale applications than scientists in other fields.

According to EPSRC, the new measures are intended "to help alleviate pressure on all involved in our peer review process," pressure created by the large number of proposals submitted to U.K. funding agencies. The number of proposals has doubled over the past 20 years.

IN PROTEST against the policy, Sweeney started an online petition, which has garnered more than 1,800 names, nearly 10 times the number of people who will be directly affected by the new policy. This is partly because scientists who serve as reviewers would rather not be put in the position of dealing a devastating blow to a colleague's livelihood under the new policy.

"You are not only affecting the course of a proposal but the course of someone's career," says Tom Welton, head of chemistry at Imperial College London. "I will be far less likely to review proposals if they are a bit distant from my expertise."

EPSRC's chief press officer, David Martin, notes that the agency "has received considerable feedback" about the new policy. "We are currently looking carefully at the policy in the context of the responses," he wrote in an e-mail to C&EN.

The new EPSRC policy comes against a larger backdrop of major reforms to the way U.K. university departments receive government funding for professor salaries, infrastructure, teaching, and graduate studentships. Through a government body called the Higher Education Funding Council for England (HEFCE), the U.K. government doles out $12 billion annually to 130 universities and colleges across the country. HEFCE money accounts for about 30–50% of a given chemistry department's total operating budget. The rest comes from grants and tuition fees, which form the basic operating budgets of most U.S. universities.

Every six years or so, HEFCE performs an extensive evaluation and ranking of university research departments across the country, called the Research Assessment Exercise (RAE), the results of which are front-page news in the U.K. The real-world impact of these evaluations can be momentous: The 2001 RAE ranking was the basis for the closure of several U.K. chemistry departments.

The RAE rankings also form the basis on which the U.K. government splits the $12 billion HEFCE pot. Under this system, the rankings of individual departments combine to give an overall university value. HEFCE then uses this value to distribute its funds to each university, which can divvy up the money however its administration sees fit, perhaps choosing to boost a weak department or strengthen an already excellent one.

For decades, the rankings for every department in the country have been determined by peer review. Representatives from each discipline are tapped to assess departments within their field, in an overall process that takes more than a year.

Departments prepare a package for the RAE committee, including extensive lists of everything from departmental equipment to student application statistics. These packages also include references to four of each researcher's best works.

Departments can choose to forward papers from all active researchers in their unit or only from their top performers, to improve their RAE ranking. When final funding amounts are determined, HEFCE normalizes the process by dividing rankings by the total number of researchers in a department, akin to the way a grade point average is calculated.

Those on the RAE review committees "spend three solid months reading," says Andrew Harrison, a chemist at the University of Edinburgh who is temporarily at France's Institut Laue-Langevin and was involved in the last RAE evaluation, which reported in December 2008. The chemistry reviewers then spend about 15 working days putting together the final rankings, Harrison says.

THE RAE PROCESS has long been the source of grievances: There are complaints that university departments recruit top players just before RAE submissions are due so that they can boost their profile. Then there are the hard feelings and internal politics that emerge when some departments omit less-productive investigators from the portfolio sent to the RAE evaluators in order to boost rankings. Many also say that the RAE process is too lengthy and expensive.

So in 2006, "we responded to a call to make the RAE review a lower burden and simpler," says Pamela Macpherson-Barrett, a higher education policy adviser at HEFCE. The agency announced that it would change how rankings were determined by switching the RAE from a peer-review process to a "metrics-based" approach. This alarmed many researchers, who worried that replacing human reviewers with equations, based perhaps on citation indexes or total grant funding, could not produce an evaluation that properly reflects research value.

Individuals, institutions, and associations such as the Royal Society of Chemistry wrote letters and position papers about the proposed metrics-based evaluation. Among the concerns: how emerging but exciting scientific areas, which are not yet highly cited, would be accounted for, and the fact that some research simply costs less and therefore attracts less grant income, which is no reflection of its scientific worth.

Macpherson-Barrett says HEFCE took notice of the widespread concerns and is revising its plan to use only "robust" metrics, with expert reviewers filling the remaining gaps. The council is currently running a pilot assessment of metrics-based evaluation, and it plans to propose the new RAE assessment methodology in autumn 2009, she tells C&EN.

Still, "the confluence of events has many people nervous," Welton adds, pointing to everything from the changes in RAE assessments to EPSRC's move to cut off some researchers from applying for grants. The global economic downturn, which makes industry funding less of an option, also doesn't help, he says. The U.K. government added yet more stress last month when it announced that science funding should be directed toward research that could benefit the economy.

When it comes down to it, many of the changes being proposed are about how scientists and their work are evaluated. "Scientists are humans," Welton notes, "and of course we feel strongly about how we are judged, especially when it is about something so intrinsic as our value as researchers."
