Computational Chemistry

Machine learning predicts electronic properties at relatively low computational cost

Algorithm aims for the accuracy of more computationally intensive approaches

by Sam Lemonick

August 9, 2018

When chemists want to model the structural and electronic properties of atoms or molecules, they often turn to a computational technique called density functional theory (DFT). For most uses, DFT produces accurate results at modest computational cost. When DFT fails, chemists turn to approaches such as coupled cluster (CC) theory or second-order Møller–Plesset perturbation theory (MP2). These methods generate more reliable values than DFT does, but they require thousands of times as much computational power, even for small molecules.

Thomas F. Miller and colleagues at the California Institute of Technology now demonstrate that machine learning might offer the best of both worlds: as accurate as CC or MP2 and no more costly than DFT (J. Chem. Theory Comput. 2018, DOI: 10.1021/acs.jctc.8b00636).

The researchers wanted to predict electronic structure correlation energies, a measure of interactions between electrons that helps chemists model how a molecule behaves. Their machine learning approach learns from a training set of molecules whose correlation energies are already known, then predicts those values for new molecules.

When computational chemists have previously tried to replace traditional computational techniques with machine learning algorithms, they’ve trained the system on data like the types of atoms or bond angles in a molecule. Miller’s group trained their algorithm only on localized molecular orbitals of a set of small molecules. Because molecular orbitals are agnostic to the underlying bonds and atoms, Miller says the new algorithm could predict properties for many different molecules with a small starting set of data. “Instead of having these huge numbers of machine learning variables” that increase as the system size grows, Miller says, “we can machine learn with respect to these molecular orbitals, which are pretty similar across different systems.” That makes the machine learning less complex, he adds.
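The article does not spell out the algorithm's internals, so what follows is only a minimal sketch of the general idea under loudly stated assumptions. It assumes the correlation energy can be written as a sum of contributions from pairs of localized molecular orbitals, that each pair is described by a short feature vector, and that a regression model learns each pair's contribution from its features. Kernel ridge regression stands in for whatever regressor the Caltech team actually used; the class `PairEnergyModel`, the one-number features, and the toy energies are all hypothetical and are not taken from the study.

```python
import math

# ASSUMPTION (illustrative only): correlation energy = sum of contributions
# from pairs of localized molecular orbitals, each pair described by a small
# feature vector. Kernel ridge regression is a stand-in regressor, not
# necessarily the method used in the study.

def rbf_kernel(x, y, length_scale=1.0):
    """Gaussian (RBF) similarity between two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * length_scale ** 2))

def solve(matrix, rhs):
    """Solve matrix @ w = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    a = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (a[r][n] - sum(a[r][c] * w[c] for c in range(r + 1, n))) / a[r][r]
    return w

class PairEnergyModel:
    """Hypothetical model: orbital-pair features -> pair correlation energy."""

    def __init__(self, length_scale=1.0, ridge=1e-8):
        self.length_scale = length_scale
        self.ridge = ridge  # small regularizer added to the kernel diagonal

    def fit(self, features, energies):
        self.train_x = features
        n = len(features)
        gram = [[rbf_kernel(features[i], features[j], self.length_scale)
                 + (self.ridge if i == j else 0.0) for j in range(n)]
                for i in range(n)]
        self.weights = solve(gram, energies)
        return self

    def predict_pair(self, x):
        """Predicted contribution of one orbital pair."""
        return sum(w * rbf_kernel(x, xt, self.length_scale)
                   for w, xt in zip(self.weights, self.train_x))

    def predict_total(self, pair_features):
        """Molecular correlation energy as a sum over orbital-pair predictions."""
        return sum(self.predict_pair(x) for x in pair_features)

# Toy, synthetic data (NOT from the study): one feature per orbital pair and
# pair energies in arbitrary units, generated from an arbitrary smooth function.
train_x = [[0.0], [0.5], [1.0], [1.5], [2.0]]
train_y = [-0.01 * math.exp(-x[0]) for x in train_x]
model = PairEnergyModel().fit(train_x, train_y)

# A "new molecule" is just a longer or shorter list of orbital-pair features;
# the trained model itself does not grow with molecule size.
new_molecule_pairs = [[0.25], [1.25], [0.75]]
print(round(model.predict_total(new_molecule_pairs), 5))
```

Because this sketch works on individual orbital pairs, predicting a larger molecule means summing over more pairs rather than training a bigger model. That is one way to read Miller's point that learning on molecular orbitals keeps the number of machine learning variables from growing with system size.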

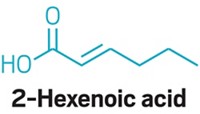

In one example, the researchers trained their algorithm on the molecular orbitals of water, then predicted the correlation energies of ammonia, methane, and hydrogen fluoride. Even the least accurate of the three predictions, for methane, was just 0.24% off from the value generated by CC. The algorithm was also faster than CC: the calculation for a cluster of six water molecules took two minutes with machine learning, compared with 28 hours for CC.
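As a rough back-of-the-envelope comparison (a ratio computed here from the quoted timings, not a figure reported by the researchers), the numbers for the six-molecule water cluster correspond to a speedup of about 840-fold:

$$\frac{28\ \text{h} \times 60\ \text{min}/\text{h}}{2\ \text{min}} = \frac{1680\ \text{min}}{2\ \text{min}} = 840$$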

The team did find examples where the algorithm fell short. Their system did poorly in predicting values for butane and isobutane after training on methane and ethane. Including propane in the training set led to more accurate results.

Miller says the same technique could be used to predict other properties for a diverse array of molecules. He hopes this technique will complement other machine learning and electronic structure techniques, not replace them. And he emphasizes that these early results are still a long way from a system that anyone could use. Others agree. “I think it’s an excellent idea, and looks promising, but it can be harder than it looks to make it a general-purpose tool,” Kieron Burke, a computational chemist at the University of California, Irvine, says.
