Toxicology

Big data mining predicts toxicity better than animal tests

by Britt Erickson

July 13, 2018

A version of this story appeared in Volume 96, Issue 29

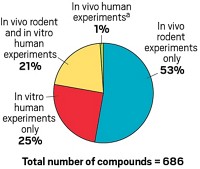

Algorithms derived from large databases of chemical structure and toxicity relationships may be better at predicting the toxicity of chemicals than individual animal tests, according to a study by scientists at Johns Hopkins Bloomberg School of Public Health (Toxicol. Sci. 2018, DOI: 10.1093/toxsci/kfy152). The study relies on the world’s largest machine-readable chemical database, created by the team two years ago. The researchers used machine-learning algorithms to read across structure and toxicity information for about 10,000 chemicals from 800,000 toxicology tests. They then created software to predict whether any given chemical is likely to cause skin irritation, DNA damage, or other toxic effects. The software was on average about 87% accurate in predicting consensus results for nine common toxicity tests that use animals. The actual animal tests averaged only about 81% accuracy, the authors report. This is “big news for toxicology,” principal investigator Thomas Hartung, a professor in the Department of Environmental Health & Engineering at the Bloomberg School, says in a press release. “These results are a real eye-opener—they suggest that we can replace many animal tests with computer-based prediction and get more reliable results.”
