Chemical Weapons

A toxic twist on AI for drug design

Chemistry-focused machine learning tools can be misused to design potential chemical weapons

by Laura Howes

May 10, 2022

A version of this story appeared in Volume 100, Issue 17

All it took was a minor edit to Collaborations Pharmaceuticals’ code. Suddenly, an algorithm for designing drugs to treat Alzheimer’s disease was suggesting thousands of chemical structures for nerve agents instead. Senior scientist Fabio Urbina was shocked. With very little effort, he’d just made a machine for designing new chemical weapons.

Chemical weapons have plagued warfare at least since the ancient Greeks used extracts of hellebore to poison the water supply of besieged cities. But since 1997, the Chemical Weapons Convention (CWC) has banned the development, production, stockpiling, and use of any chemical weapon by convention members, which include all but four countries around the globe. According to the CWC, chemical weapons are toxic chemicals or precursors intended for misuse; munitions and devices to deliver those chemicals; and equipment designed to use those munitions.

After parties to the convention agreed to destroy their chemical weapons, global stockpiles of the weapons decreased significantly and continue to do so. Despite this, bad actors and terrorist organizations still make and use chemical weapons. That means that organizations that are committed to nonproliferation need to monitor new developments in chemical and biological science and their potential implications.

As part of Spiez Laboratory’s mission to protect against nuclear, biological, and chemical threats, it organizes “convergence” conferences every 2 years to identify emerging threats to the international control of biological and chemical weapons. In preparation for a conference held last year, Cédric Invernizzi, who studies nuclear, biological, and chemical weapons control at Spiez Laboratory, contacted Collaborations Pharmaceuticals. He asked the biotech company to consider how scientists could use artificial intelligence drug-design tools, which are intended to benefit human health, for harm instead. “We invited them to present their cutting-edge work,” Invernizzi says, “but also to ask them to reflect on the potential for misuse in order to discuss the potential implications, in particular for the chemical and biological weapons conventions.”

The ease with which Collaborations Pharmaceuticals generated potential new toxic compounds raised big questions among conference goers and beyond. But while experts debate how significant the threat of AI-designed chemical weapons is, they already agree that questions around dual use—the use of something for both beneficial and harmful purposes—in chemistry need broader consideration.

Creating a credible threat

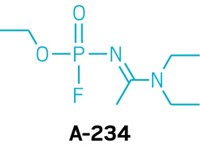

One of the chemicals listed under the strictest CWC controls is VX, a highly toxic organophosphorus nerve agent developed in the 1950s. VX is one of many nerve agents that block the enzyme acetylcholinesterase, thereby causing muscle paralysis and, without treatment, death. But acetylcholinesterase is also a target of therapeutic drugs. So Collaborations Pharmaceuticals’ Urbina and company CEO Sean Ekins realized it would take only a small change to repurpose the company’s drug-designing algorithm to favor chemicals with high toxicity scores instead of low ones.

“Oftentimes, with these generative models, we include these toxicity data sets in order to drive the molecules away from toxicity,” Urbina says. “So in a sense, we had already had the entire thing set up to go.” After changing the code to select for toxicity, Urbina let the algorithm run. He came back the next day to find his program had suggested thousands of compounds with predicted toxicities at the same level as or worse than the toxicity of VX.

Without sharing the structures of potential new chemical weapons, Urbina and Ekins presented their findings at the Spiez Convergence conference last year. Those present recall a ripple of shock going around the room. Filippa Lentzos, who studies science and international security at King’s College London, was alarmed. Plenty of chemical weapons may be out there already, she says, but “we don’t need more ways to kill people.”

On the other hand, Marc-Michael Blum, an expert in chemical weapons detection who chaired the session, believes that while the field should monitor these developments, bad actors probably won’t use AI drug-design tools this way immediately. “I see the misuse potential,” he says, “but going from there to new chemical weapons is not so trivial.”

Chemists would need to do a lot of work to develop the predicted compounds into something stable with suitable characteristics for chemical weapons–filled munitions, for instance, he says. And plenty of chemical weapons already exist: Syrian government forces used chemicals like sarin or chlorine in recent years. So why, Blum says, would bad actors need more?

It may not be new compounds that lawbreakers seek, but stealthy ways to make known chemical weapons. AI tools that plan synthesis routes to make target compounds could be misused for this purpose. For example, AI tools could suggest new ways of making chemical weapons to evade detection of their production. One way to do that would be by suggesting new synthetic pathways that use precursors that aren’t on watch lists.

Gram-scale syntheses of a poison designed for assassination are trickier to detect than large-scale chemical weapons plants, however, and that’s where AI design might prove to be a bigger problem. Smaller-scale productions can slip under the radar, whether at a government facility or in small terrorist cells. Ultimately, Blum says, if people with access to the right equipment and skills want to make chemicals to kill, they can, with or without AI.

Dual-use discussions

While the AI-based design of chemical weapons might not be an imminent threat, a more critical issue for many researchers is the uncomfortable fact that they hadn’t considered the problem before. This realization highlights a gap in discussions on ethics and chemistry, especially at the convergence of multiple disciplines.

Collaborations Pharmaceuticals’ Ekins says he had never considered the dual use of his technologies before Invernizzi invited him to present at the Spiez Convergence conference. “Thinking about a bad use of the technology or the dual-use potential of software? We hadn’t thought about that, honestly.”

Ekins worked with Urbina, Lentzos, and Invernizzi to report Collaborations’ initial findings and some practical advice for others who use AI for drug design (Nat. Mach. Intell. 2022, DOI: 10.1038/s42256-022-00465-9). In particular, they propose more training so that researchers using AI in chemistry don’t overlook their work’s ethical implications.

Jeffrey Kovac is an emeritus professor at the University of Tennessee, Knoxville, who has explored various questions relating to ethics in chemistry. He thinks the ethical considerations for AI-based chemical design aren’t that different from those posed by standard chemical synthesis. “I don’t know that the AI researchers need to ask any different questions than the traditional synthetic chemist,” Kovac says. For example, all chemists should consider the environmental or security implications of their work. The problem, he says, is that chemists often fail to consider these factors at all.

“Essentially, every decision a chemist makes has a technical component and an ethical component,” Kovac says. “Chemists, however, tend to ignore the ethical component.”

There are ethical guidelines for chemists to refer to. One set is the Hague Ethical Guidelines, which were designed to support the Chemical Weapons Convention. These guidelines say that “achievements in the field of chemistry should be used to benefit humankind and protect the environment” and that chemists should always be aware of the multiple uses—including the potential misuses—of chemicals and equipment. The American Chemical Society also supported the development of the Global Chemists’ Code of Ethics, which builds on the Hague guidelines. These guidelines are available in multiple languages on the ACS website. ACS publishes C&EN.

Stefano Costanzi is a chemistry professor at American University who uses computational chemistry to understand how chemicals affect living organisms. He also works to counter the proliferation of weapons of mass destruction, especially chemical weapons. He says that these guidelines are a start, but they could be developed further with more concrete, detailed examples.

Many of those contacted for this article told C&EN that they believe strongly that the teaching of ethics cannot be limited to a single course or training session. Chemists need to integrate these questions into the curriculum and general practice.

“I made this a consideration all the time in my research,” Costanzi says. “Should I do this? Should I study this? Is it dual use? Is it something that I can publish? Is it something that I feel comfortable involving my students in? It’s something that’s always on my mind because I do study these sorts of issues. And I think that if we manage to expand this type of frame of mind to our colleagues, that is a good thing.”

AI is ultimately just a tool that can be used for good or ill. Costanzi says computational tools could be used to help enforce the CWC. For instance, he is building software that both researchers and nonspecialists like border-control agents could use to check a database to see if a given chemical is subject to controls (Pure Appl. Chem. 2022, DOI: 10.1515/pac-2021-1107). These resources would automate something that can take time even for experienced chemists and could help control the trade in precursors for chemical or conventional weapons.

He also argues that the CWC should focus more on monitoring whole families of chemicals rather than listing specific subsections of those families. The poisoning of Russian opposition politician Alexei Navalny, for example, was a crime. But the specific chemical used was not subject to the strictest controls of the convention, because it had a different structure from those listed as known chemical weapons. Costanzi says that mismatch comes from the difference between the policy and scientific worlds. He’d like to see more venues for cross-disciplinary conversations to help close the gap.

Working in concert

On the same day that Urbina, Lentzos, Invernizzi, and Ekins spoke to C&EN, the team briefed the White House Office of Science and Technology Policy. They also have another article under consideration at a journal; Ekins will present to the Organisation for the Prohibition of Chemical Weapons, the organization that implements the CWC, in June; and the team is submitting abstracts to multiple scientific conferences to try to raise more awareness. Their initial experiment, they say, should act as a teachable moment for the field.

“There’s no easy fix to this,” Lentzos of King’s College London says. “This is a process.” For her, that means more consideration at every stage, “from research institutions and publishers and governments and everybody. They have their roles to play. But scientists also have a role to play here. And I think scientists have an obligation to educate themselves.”

Urbina thinks one way researchers working on AI in chemistry or biology could help is by adding ethical discussions to their research papers. “It would be wonderful if every time I read a paper in this sort of field, I could expect to see some sort of section or some couple of sentences on how it can be misused,” he says. “I think it would hopefully be almost an infection of the cultural thought. As you see this in somebody else’s paper, you start wanting to put it in your own papers.”

Meanwhile, as CEO of Collaborations Pharmaceuticals, Ekins is already thinking about how he might manage access to the company’s software to prevent bad actors from building on its experiments. “As an industry, we have to be more vigilant,” he says. “This is a growing ecosystem. . . . all of the pharmaceutical companies are engaging in this AI space. And none of them, to our knowledge, have thought about implications like this.”
